Contrastive Learning for Weakly Supervised Phrase Grounding

Jan Kautz Derek Hoiem

European Conference on Computer Vision (ECCV) . 2020 . Spotlight

Abstract

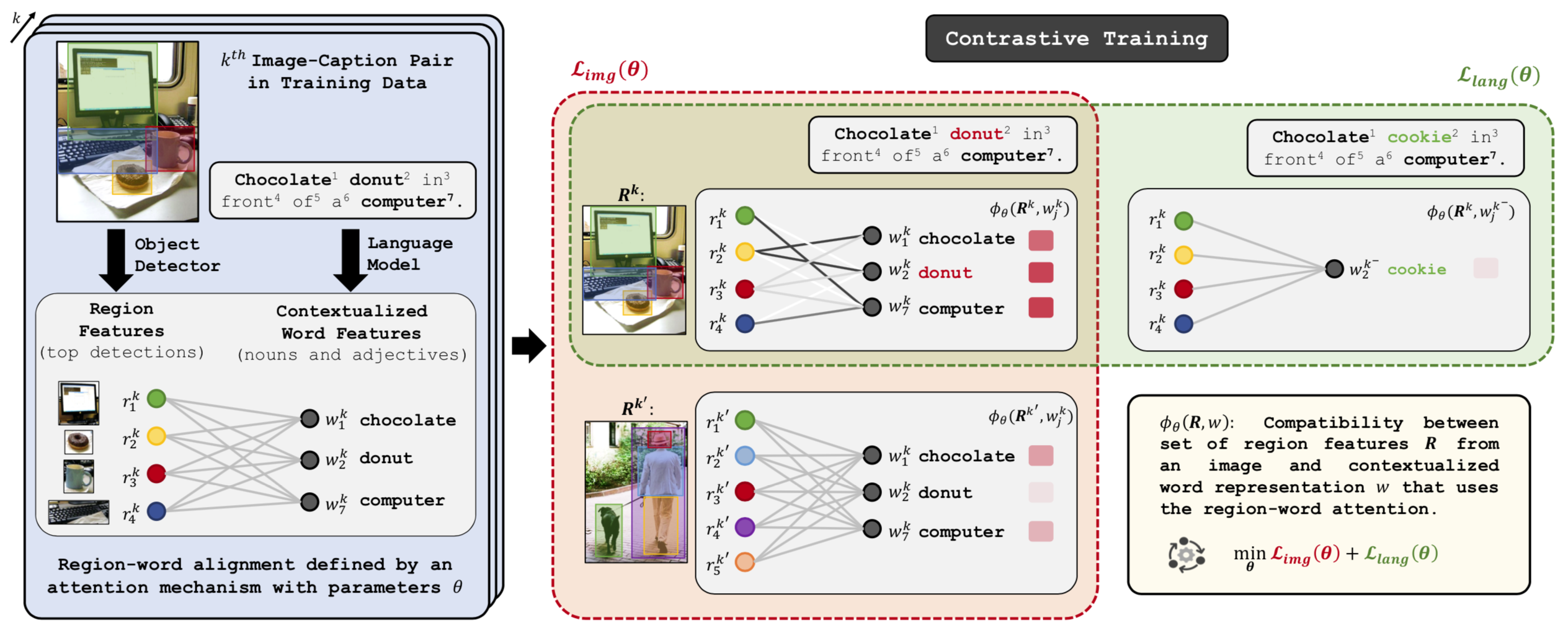

Phrase grounding, the problem of associating image regions to caption words, is a crucial component of vision-language tasks. We show that phrase grounding can be learned by optimizing word-region attention to maximize a lower bound on mutual information between images and caption words. Given pairs of images and captions, we maximize compatibility of the attention-weighted regions and the words in the corresponding caption, compared to non-corresponding pairs of images and captions. A key idea is to construct effective negative captions for learning through language model guided word substitutions. Training with our negatives yields a ~10% absolute gain in accuracy over randomly-sampled negatives from the training data. Our weakly supervised phrase grounding model trained on COCO-Captions shows a healthy gain of 5.7% to achieve 76.7% accuracy on Flickr30K Entities benchmark.

A quick overview …

A detailed look …

Randomly sampled qualitative results

In addition to the results included in the paper, here are some randomly selected results to help readers get a sense of performance qualitatively.

Bibtex

@article{gupta2020contrastive,

title={Contrastive Learning for Weakly Supervised Phrase Grounding},

author={Gupta, Tanmay and Vahdat, Arash and Chechik, Gal and Yang, Xiaodong and Kautz, Jan and Hoiem, Derek},

booktitle={ECCV},

year={2020}

}